SynthLab was designed from the ground up to follow an audio block processing paradigm that is relatively standard in the industry: audio is rendered in blocks rather than one sample at a time. When you create the SynthEngine object, you initialize it with the maximum block size in frames, where a frame holds one sample per channel (for example, 64 stereo frames span 64 sample intervals and contain 128 samples in total). During the render operation, you also tell the engine how many frames of this maximum are valid (require audio data), in case there are partial blocks to render. The SynthEngine will render any number of frames up to the maximum, so partial blocks do not need special handling or buffering. If the user chooses a buffer size that is smaller than the engine's maximum, the engine treats each of those buffers as a partial block and renders it as normal; partial blocks are not optimal but are allowed.
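
The block arithmetic described above can be sketched as follows. This is only an illustration: `planBlocks` is a hypothetical helper, not part of SynthLab, and it assumes the host buffer is simply cut into maximum-size blocks with whatever remains rendered as a final partial block.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper (not a SynthLab function): returns the number of valid
// frames in each block when a host buffer of hostFrames frames is rendered
// in blocks of at most maxBlockFrames frames.
std::vector<uint32_t> planBlocks(uint32_t hostFrames, uint32_t maxBlockFrames)
{
    assert(maxBlockFrames > 0);

    std::vector<uint32_t> blocks;
    uint32_t remaining = hostFrames;
    while (remaining > 0) {
        // Full blocks first; the last block may be partial.
        const uint32_t valid = std::min(remaining, maxBlockFrames);
        blocks.push_back(valid);
        remaining -= valid;
    }
    return blocks;
}
```

With a maximum block size of 64, a 150-frame host buffer yields blocks of 64, 64, and 22 valid frames, while a 32-frame host buffer yields a single partial block of 32.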

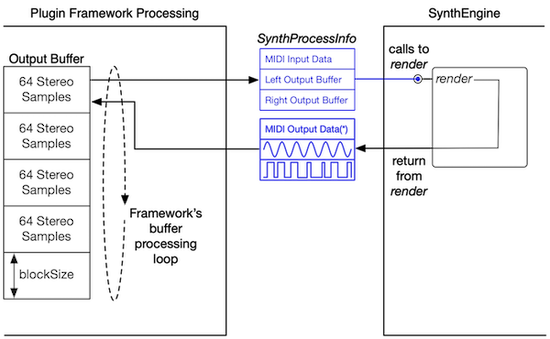

The blocks of audio are usually short, 64 samples per channel or less, to maintain the tactile response of the instrument. During the render phase, the plugin framework prepares a SynthProcessInfo structure for the SynthEngine and passes that structure in with the render() function call.

The SynthProcessInfo structure:

- contains pointers to buffers for the engine to fill with freshly rendered audio data

- includes a vector of midiEvent structures that encode the MIDI messages that occurred during that audio block time

- contains fundamental DAW information about the current session; the BPM and time signature numerator/denominator

In Figure 1, you can see how the framework sends blocks of empty buffers to the engine along with a chunk of MIDI messages that occurred during that block. The engine returns the output buffers filled with freshly rendered audio, which the framework writes into its own output buffers. The process repeats as the render cycle. Notice that this structure does not contain parameter information from the GUI. The GUI update part of the render cycle occurs just prior to the render() function call and is covered in the previous section.

Figure 1: the render cycle showing the relationship between the plugin framework and engine

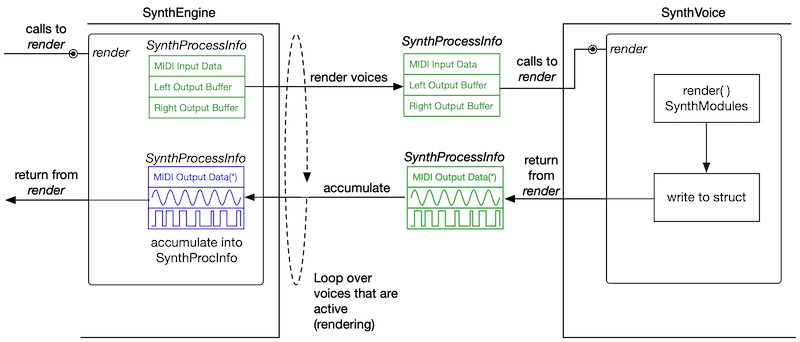

Likewise, the engine prepares a single but separate SynthProcessInfo structure to deliver to the SynthVoice objects that are "active", meaning that they are rendering audio for a note event. Figure 2 shows the relationship between the engine, voices, and the SynthProcessInfo structures used during the function calls. When a voice's render() function returns, the engine takes the audio in that SynthProcessInfo structure and accumulates it into the original SynthProcessInfo that the framework delivered. The voice does not use the SynthProcessInfo when rendering audio from its SynthModule members. Instead, it uses the simpler AudioBuffer object.
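
The accumulation step can be pictured with a minimal sketch. The `ChannelBuffers` alias and the `accumulateVoice` function are hypothetical stand-ins invented for this illustration; they are not SynthLab types, and the sketch assumes accumulation is a plain sample-by-sample sum over the valid frames.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a set of output buffers: one vector of samples
// per channel. SynthLab's own buffer classes are not modeled here.
using ChannelBuffers = std::vector<std::vector<float>>;

// Adds one voice's rendered output into the engine's output, touching only
// the frames that are valid for this block.
void accumulateVoice(const ChannelBuffers& voiceOut,
                     ChannelBuffers& engineOut,
                     std::size_t validFrames)
{
    assert(voiceOut.size() == engineOut.size());

    for (std::size_t ch = 0; ch < engineOut.size(); ++ch) {
        assert(validFrames <= voiceOut[ch].size());
        assert(validFrames <= engineOut[ch].size());

        for (std::size_t i = 0; i < validFrames; ++i)
            engineOut[ch][i] += voiceOut[ch][i];
    }
}
```

Calling this once per active voice leaves the engine's output holding the sum of all voices, while frames beyond `validFrames` stay untouched.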

Figure 2: the engine uses a separate (green) SynthProcessInfo structure when calling the render() function on its active voices

The code below shows the SynthProcessInfo struct definition. Take note of the following:

- it is derived from AudioBuffer, and inherits its input and output audio buffer arrays from this base class

- it contains the MIDI event queue and helper functions to allow the framework to push fresh MIDI messages into it, prior to the call to the engine's render() function, and then clear out the messages once the render operation is complete.

- the DAW data are just a set of simple members (BPM, time signature)

- your framework should decode and send the absoluteBufferTime_Sec from the DAW via this structure's member, though the SynthLab example projects do not need or rely on this information

- there is a preferred constructor that accepts the input/output channel counts and block size to set up and initialize its internal AudioBuffer arrays for operation
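
To make the points in this list concrete, here is a simplified stand-in that mirrors the layout they describe. It is not the SynthLab definition: every `Demo...` name, the helper function names, and all member names other than `absoluteBufferTime_Sec` are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the AudioBuffer base class: owns the input and
// output sample arrays, one vector per channel.
struct DemoAudioBuffer {
    DemoAudioBuffer() = default;
    DemoAudioBuffer(uint32_t numInputChannels,
                    uint32_t numOutputChannels,
                    uint32_t blockSize)
        : inputs(numInputChannels, std::vector<float>(blockSize, 0.0f)),
          outputs(numOutputChannels, std::vector<float>(blockSize, 0.0f)),
          maxBlockSize(blockSize) {}

    // Number of frames that actually need audio in the current block.
    void setValidFrames(uint32_t frames)
    {
        assert(frames <= maxBlockSize);
        validFrames = frames;
    }

    std::vector<std::vector<float>> inputs;
    std::vector<std::vector<float>> outputs;
    uint32_t maxBlockSize = 0;
    uint32_t validFrames = 0;
};

// Hypothetical MIDI event record (field names are assumptions).
struct DemoMidiEvent {
    uint8_t status;
    uint8_t data1;
    uint8_t data2;
    uint32_t frameOffset;
};

// Stand-in for the process-info structure: audio buffers come from the base
// class; MIDI events and DAW data are added here.
struct DemoProcessInfo : public DemoAudioBuffer {
    using DemoAudioBuffer::DemoAudioBuffer; // reuse the channel/block-size constructor

    void pushMidiEvent(const DemoMidiEvent& event) { midiEvents.push_back(event); }
    void clearMidiEvents() { midiEvents.clear(); }

    std::vector<DemoMidiEvent> midiEvents;
    double bpm = 120.0;
    uint32_t timeSigNumerator = 4;
    uint32_t timeSigDenominator = 4;
    double absoluteBufferTime_Sec = 0.0; // name taken from the text above
};
```

In this sketch, `DemoProcessInfo info(0, 2, 64);` would create a structure with no input channels and two 64-frame output channels, ready to receive MIDI events before each render call.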

It is important that you understand this structure because your plugin framework code will need to prepare it, load it with MIDI events, and pass it to the engine during render() calls in a sub-block processing loop within its own audio processing function, as shown in Figure 1.
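
A sub-block loop of this kind might look like the sketch below. All types here (`DemoMidiEvent`, `DemoProcessInfo`, `DemoEngine`) and the function `processHostBuffer` are hypothetical and exist only for this illustration. The demo engine merely records what it was asked to render, and the sketch assumes each MIDI event carries a frame offset relative to the start of the host buffer, which is left unchanged when the event is forwarded.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical MIDI event; hostOffset is the frame position in the host buffer.
struct DemoMidiEvent {
    uint32_t hostOffset;
    uint8_t status;
    uint8_t data1;
    uint8_t data2;
};

// Hypothetical, stripped-down process info (audio buffers omitted).
struct DemoProcessInfo {
    uint32_t validFrames = 0;
    std::vector<DemoMidiEvent> midiEvents;

    void pushMidiEvent(const DemoMidiEvent& e) { midiEvents.push_back(e); }
    void clearMidiEvents() { midiEvents.clear(); }
};

// Hypothetical engine that only logs each render request.
struct DemoEngine {
    std::vector<uint32_t> framesPerCall;
    std::vector<std::size_t> eventsPerCall;

    void render(const DemoProcessInfo& info)
    {
        framesPerCall.push_back(info.validFrames);
        eventsPerCall.push_back(info.midiEvents.size());
    }
};

// Framework-side loop: split the host buffer into blocks, load each block's
// MIDI events, render, then clear the events before the next block.
void processHostBuffer(DemoEngine& engine, DemoProcessInfo& info,
                       const std::vector<DemoMidiEvent>& hostEvents,
                       uint32_t hostFrames, uint32_t maxBlockFrames)
{
    assert(maxBlockFrames > 0);

    for (uint32_t start = 0; start < hostFrames; start += maxBlockFrames) {
        const uint32_t valid = std::min(maxBlockFrames, hostFrames - start);

        // Forward only the events that fall inside this block.
        for (const DemoMidiEvent& e : hostEvents)
            if (e.hostOffset >= start && e.hostOffset < start + valid)
                info.pushMidiEvent(e);

        info.validFrames = valid;
        engine.render(info);
        info.clearMidiEvents();
    }
}
```

A real framework would also point the structure at the correct region of the host's audio buffers for each block and copy in the DAW data; those steps are left out to keep the loop itself visible.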