SynthLab includes two high-level objects that have no base classes and do not inherit from SynthModule. The SynthVoice and SynthEngine allow you to combine the smaller SynthModule building blocks into a fully operational polyphonic software synthesizer. These two objects may also be used alone, without any of the SynthModules, or with your own synth components. The engine decodes incoming MIDI messages and delivers them to the voices, which render the notes. The engine then mixes the outputs of the voice objects, adds master bus effects (if any), and ships the resulting audio back to the plugin framework.

Big Picture

The SynthVoice:

- creates and maintains a set of SynthModules that it initializes, sends MIDI messages to, and renders audio from

- programs the modulation matrix (if used)

- implements the audio-engine that moves audio data from the oscillators through filters and DCA in response to MIDI note-on and note-off messages

- keeps track of the lifecycle of one MIDI note event, from the initial note-on to the expiration of the amp EG controlling the time-domain output envelope

- owns a SynthVoiceParameters structure that contains shared pointers to its SynthModules' shared parameter structures

- this SynthVoiceParameters structure is shared across all SynthVoice objects so that all of the identical SynthModules have instant access to the same set of parameters

The SynthEngine:

- creates and maintains a set of SynthVoices that it initializes, sends MIDI messages to, and renders audio from

- creates the MIDI, wavetable, and PCM sample databases that are shared across objects

- creates the SynthVoiceParameters structure that all voices share

- decodes MIDI events, stores CCs and other desired data in the shared MIDI input data array, and calls the note-on and note-off handler of the voice

- is the sole interface object for the plugin framework; your processor object only needs to instantiate and communicate with a single SynthEngine object to render a complete software synth

So, the SynthVoice renders audio from note events, and the SynthEngine maintains the voices, acts as the centralized hub for shared data, and interfaces with the plugin framework.
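
The MIDI side of this division of labor can be sketched as follows. This is a minimal illustration and not the SynthLab implementation: `SketchMidiEvent`, `SketchVoice`, `SketchEngine`, `processMidiEvent` and `ccTable` are hypothetical names invented for this example. The engine decodes the status byte, stores CC values in a shared table, and forwards note events to a voice. Voice stealing and the amp EG release phase are deliberately left out.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical types for illustration only; these are NOT the SynthLab classes.
struct SketchMidiEvent {
    std::uint8_t status; // status byte, e.g. 0x90 = note-on, channel 1
    std::uint8_t data1;  // note number or CC number
    std::uint8_t data2;  // velocity or CC value
};

struct SketchVoice {
    bool active = false;
    int note = -1;
    void doNoteOn(int n, int /*velocity*/) { active = true; note = n; }
    // Simplification: a real voice would stay active until its amp EG expires.
    void doNoteOff(int n) { if (active && note == n) active = false; }
};

class SketchEngine {
public:
    explicit SketchEngine(std::size_t voiceCount) : voices(voiceCount) {
        ccTable.fill(0);
    }

    void processMidiEvent(const SketchMidiEvent& ev) {
        const std::uint8_t type = ev.status & 0xF0; // strip the channel nibble
        if (type == 0x90 && ev.data2 > 0) {
            // note-on: hand the note to the first free voice (no stealing here)
            for (auto& v : voices) {
                if (!v.active) { v.doNoteOn(ev.data1, ev.data2); return; }
            }
        } else if (type == 0x80 || (type == 0x90 && ev.data2 == 0)) {
            // note-off, including note-on with velocity 0
            for (auto& v : voices) v.doNoteOff(ev.data1);
        } else if (type == 0xB0) {
            // continuous controller: store in the table shared with the voices
            ccTable[ev.data1 & 0x7F] = ev.data2;
        }
    }

    std::vector<SketchVoice> voices;
    std::array<std::uint8_t, 128> ccTable;
};
```

In this sketch the framework side would only ever call `processMidiEvent()` on the one engine object; the voices never see raw MIDI bytes.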

SynthEngine

The SynthEngine is designed to be a single object that encapsulates a complete synth. Typically, there is one and only one SynthEngine per synth project, but there is no reason you can't have more than one for a multiple-synth product. Your plugin framework uses it to render audio output blocks, which the framework then ships out to the DAW. In SynthLab, the engine creates and maintains a set of SynthVoice objects, but that is optional and you may use the engine on its own, without the voice objects.

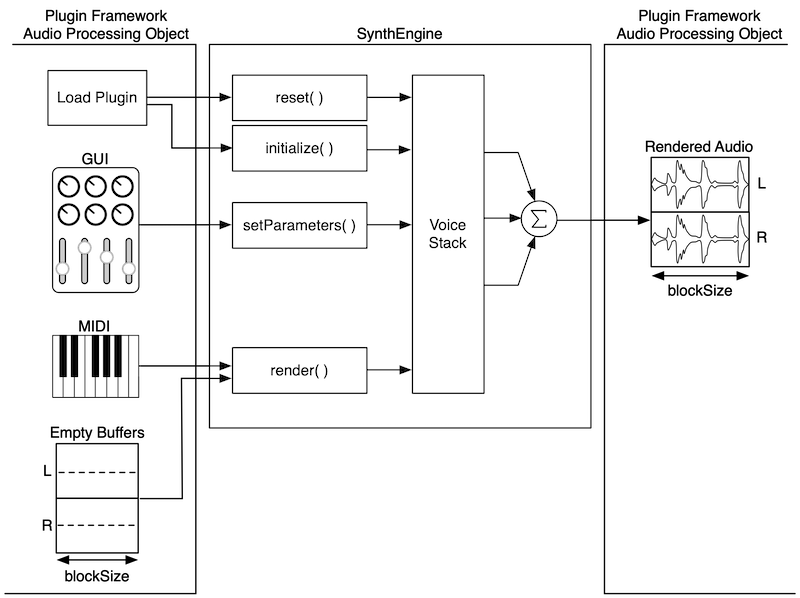

Figure 1 shows how the SynthEngine interfaces with your plugin framework's processing object. Notice that the SynthEngine has a similar, but smaller, set of operational phases and functions to the SynthModules and SynthCores from the other sections of the programming guide:

- plugin load/initialization

- GUI update

- audio render

Figure 1: the SynthEngine in relationship to the plugin framework object that owns/controls it

The SynthEngine object in the SDK is set up with some basic functionality:

- accept and decode MIDI messages

- accept a block of empty buffers from the framework

- mix together outputs of voice objects (they do NOT need to be SynthVoice objects)

- apply FX if needed

- return the rendered audio buffers to the framework.
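
The buffer-handling steps in this list can be sketched as below. This is a hedged illustration under stated assumptions, not the SDK code: `SketchRenderVoice`, `SketchMixEngine`, `masterGain` and the mono `float*` buffer layout are all hypothetical, and a simple gain stands in for the master bus FX.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical voice: writes a constant level in place of real synthesis.
struct SketchRenderVoice {
    bool active = false;
    float level = 0.0f;
    void render(float* out, std::size_t numSamples) const {
        std::fill(out, out + numSamples, level);
    }
};

// Hypothetical engine; NOT the SynthLab SynthEngine.
class SketchMixEngine {
public:
    SketchMixEngine(std::size_t voiceCount, std::size_t maxBlockSize)
        : voices(voiceCount), scratch(maxBlockSize, 0.0f) {}

    // 'output' is the empty mono buffer handed over by the framework.
    void render(float* output, std::size_t numSamples) {
        // never process more samples than the preallocated scratch buffer holds
        const std::size_t n = std::min(numSamples, scratch.size());

        // 1. clear the framework buffer
        std::fill(output, output + numSamples, 0.0f);

        // 2. render each active voice and accumulate it into the mix
        for (const auto& voice : voices) {
            if (!voice.active) continue;
            voice.render(scratch.data(), n);
            for (std::size_t i = 0; i < n; ++i) output[i] += scratch[i];
        }

        // 3. master bus processing (a plain gain stands in for FX)
        for (std::size_t i = 0; i < n; ++i) output[i] *= masterGain;
        // 4. 'output' now holds the rendered audio for the framework
    }

    std::vector<SketchRenderVoice> voices;
    float masterGain = 1.0f;

private:
    std::vector<float> scratch; // preallocated so render() does not allocate
};
```

The scratch buffer is sized once at construction so that nothing is allocated during the audio render phase, which is a common design choice for real-time code.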

SynthVoice

If you worked through the MinSynth C++ object in the Standalone Programming Guide, then you already have a good feel for how the SynthVoice is designed to operate. The MinSynth C++ object held a collection of SynthModules, reset them, sent them MIDI events, and called the render() method on them. It also moved audio data between the oscillator's output buffer and the filter's input buffer. The SynthVoice object is designed with a similar set of functional goals. First, it contains instances of each of the SynthModules in its architecture. Second, it manipulates and controls these SynthModules to follow the operational phases for a software synth as outlined and detailed in the synth book. These phases can be condensed down to three basic functions:

- Initialization: the voice calls the module's reset function

- Note-on and Note-off: the voice calls the doNoteOn and doNoteOff methods on its set of modules

- Controlling Audio Signal Flow: the voice calls the module's update and render functions during each block processing cycle, and delivers the rendered audio back to the engine
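
The three functions above can be sketched with a pair of stand-in modules. Everything here is hypothetical and only mirrors the method names mentioned in this section (reset, update, render, doNoteOn, doNoteOff); the real SynthModule signatures are not shown in this guide section and may differ.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical module interface; NOT the SynthLab SynthModule.
struct SketchModule {
    virtual ~SketchModule() = default;
    virtual void reset(double sampleRate) = 0;
    virtual void update() = 0;
    virtual void render(std::vector<float>& buffer) = 0;
    virtual void doNoteOn(int note) = 0;
    virtual void doNoteOff(int note) = 0;
};

// Stand-in oscillator: outputs 1.0 while a note is held, else silence.
struct SketchOsc : SketchModule {
    bool gate = false;
    void reset(double) override { gate = false; }
    void update() override {}
    void render(std::vector<float>& b) override {
        for (float& s : b) s = gate ? 1.0f : 0.0f;
    }
    void doNoteOn(int) override { gate = true; }
    void doNoteOff(int) override { gate = false; }
};

// Stand-in DCA: scales the buffer in place.
struct SketchGain : SketchModule {
    float gain = 0.5f;
    void reset(double) override {}
    void update() override {}
    void render(std::vector<float>& b) override {
        for (float& s : b) s *= gain;
    }
    void doNoteOn(int) override {}
    void doNoteOff(int) override {}
};

class SketchSynthVoice {
public:
    explicit SketchSynthVoice(std::size_t blockSize) : buffer(blockSize, 0.0f) {}

    // Initialization: reset every module
    void reset(double sampleRate) { osc.reset(sampleRate); dca.reset(sampleRate); }

    // Note-on and note-off: forward the event to every module
    void doNoteOn(int note) { osc.doNoteOn(note); dca.doNoteOn(note); }
    void doNoteOff(int note) { osc.doNoteOff(note); dca.doNoteOff(note); }

    // Audio signal flow: update, then render oscillator -> DCA for one block
    const std::vector<float>& render() {
        osc.update();
        dca.update();
        osc.render(buffer); // oscillator fills the block
        dca.render(buffer); // DCA processes the same block in place
        return buffer;      // handed back to the engine for mixing
    }

    SketchOsc osc;
    SketchGain dca;

private:
    std::vector<float> buffer;
};
```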

SynthEngine and SynthVoice

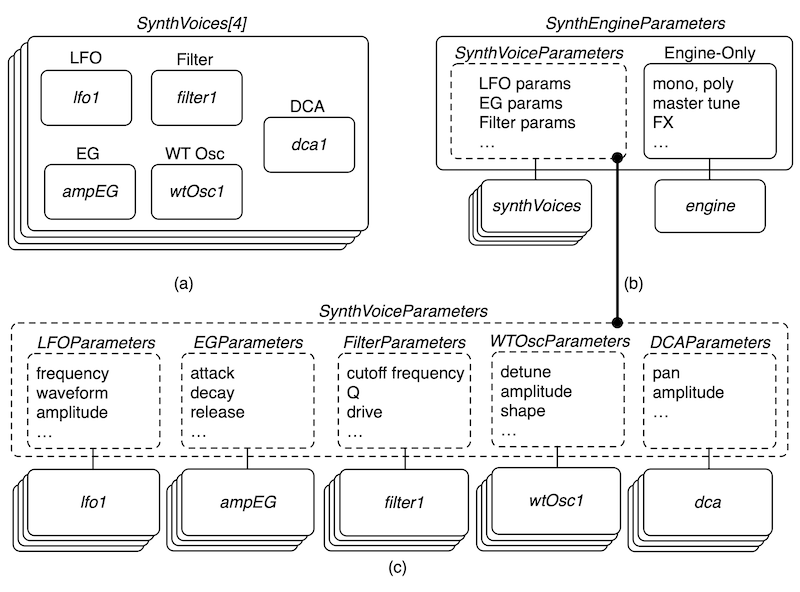

The SynthEngine and one or more SynthVoice objects can be combined to create a complete operational synth, using the theory and design methods from the synth book. Figure 2 shows how a set of simple synth voices are combined with the SynthEngine to allow for the efficient and safe sharing of MIDI data, wavetable and PCM sample data, and GUI parameters. Note that the theory is deep and explained fully in the synth book, so it will not be repeated here. You may use the example synth projects, which differ only in the SynthVoice object and GUIs, as a basis for deeper understanding in the absence of the synth book.

In addition to managing the components, decoding MIDI messages and rendering the audio, the engine and voice objects play a critical role in sharing MIDI, wavetable, PCM sample, and GUI parameters across the arrays of objects that are fundamentally identical and respond identically to the GUI control changes. This sharing scheme involves std::shared_ptrs in the engine and voice parameter structures, which are distributed across the SynthComponent objects at creation time. Note that all objects implement a getParameter() method to obtain a pointer to the shared parameters.
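
A minimal sketch of this kind of std::shared_ptr distribution is shown here. The structure and class names (`SketchFilterParams`, `SketchVoiceParams`, `SketchFilter`, `SketchParamVoice`, `SketchParamEngine`) and the `fc` member are assumptions made for illustration, and the `getParameter()` accessor only borrows its name from the text above; the actual SynthLab declarations may look quite different.

```cpp
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical per-module parameter structure (e.g. set from the GUI).
struct SketchFilterParams {
    double fc = 1000.0; // cutoff frequency in Hz
};

// Hypothetical voice parameter structure holding shared module parameters.
struct SketchVoiceParams {
    std::shared_ptr<SketchFilterParams> filter =
        std::make_shared<SketchFilterParams>();
};

// Hypothetical module: receives its shared parameters at creation time.
class SketchFilter {
public:
    explicit SketchFilter(std::shared_ptr<SketchFilterParams> p)
        : params(std::move(p)) {}
    std::shared_ptr<SketchFilterParams> getParameter() const { return params; }
    double currentCutoff() const { return params->fc; }

private:
    std::shared_ptr<SketchFilterParams> params;
};

// Hypothetical voice: passes the shared module parameters down to its module.
struct SketchParamVoice {
    explicit SketchParamVoice(std::shared_ptr<SketchVoiceParams> p)
        : params(std::move(p)), filter(params->filter) {}
    std::shared_ptr<SketchVoiceParams> params; // declared (and initialized) first
    SketchFilter filter;
};

// Hypothetical engine: creates ONE parameter structure for all voices.
struct SketchParamEngine {
    explicit SketchParamEngine(std::size_t voiceCount)
        : voiceParams(std::make_shared<SketchVoiceParams>()) {
        for (std::size_t i = 0; i < voiceCount; ++i)
            voices.emplace_back(voiceParams);
    }
    std::shared_ptr<SketchVoiceParams> voiceParams;
    std::vector<SketchParamVoice> voices;
};
```

With this arrangement, a single write such as `engine.voiceParams->filter->fc = 2500.0;` is visible to the filter in every voice, because all of them hold a pointer to the same structure rather than a copy.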

Figure 2.3 from the book, shown below, depicts a Voice object that contains one LFO, EG, oscillator, filter and DCA (the same as the MinSynth C++ object from the Standalone Programming Guide). You can see that the relationship between the engine and voice parameters allows the sharing of GUI control information across the voices and their SynthModule components.

Figure 2.3: (a) the engine owns a stack of voice objects (four in this example, but 16 in the SynthLab projects) each of which includes an identical set of LFO, filter, EG, oscillator and DCA objects (b) the engine owns a voice parameter structure that is shared across these voices and (c) the shared voice parameters structure consists of a set of shared module parameter structures

The following sections will give you more information on these high-level objects. Notice that they are already set up with virtual functions, so you may use them as base classes for your own variations.

Voice/Engine Guide